The global decline in electric vehicle demand has hit Tesla hard, with reports of layoffs and paused projects. But for a visionary like Elon Musk, adversity breeds opportunity, and his latest proposal is to transform Tesla’s fleet into a roaming cloud platform.

Instead of viewing each Tesla as just an electric car, Musk suggests leveraging their idle time to turn them into a cloud computing platform for complex AI computations, much like how Amazon utilizes unused server capacity for its AWS cloud service.

“There’s a potential here … when your car is not in use, [to] have it contribute its idle compute resources to a giant distributed computation,” Musk said. “If you imagine a future where we’ve got 100 million Teslas, and sometimes the car is just sitting there, then we can effectively utilize that [for AI computations].”

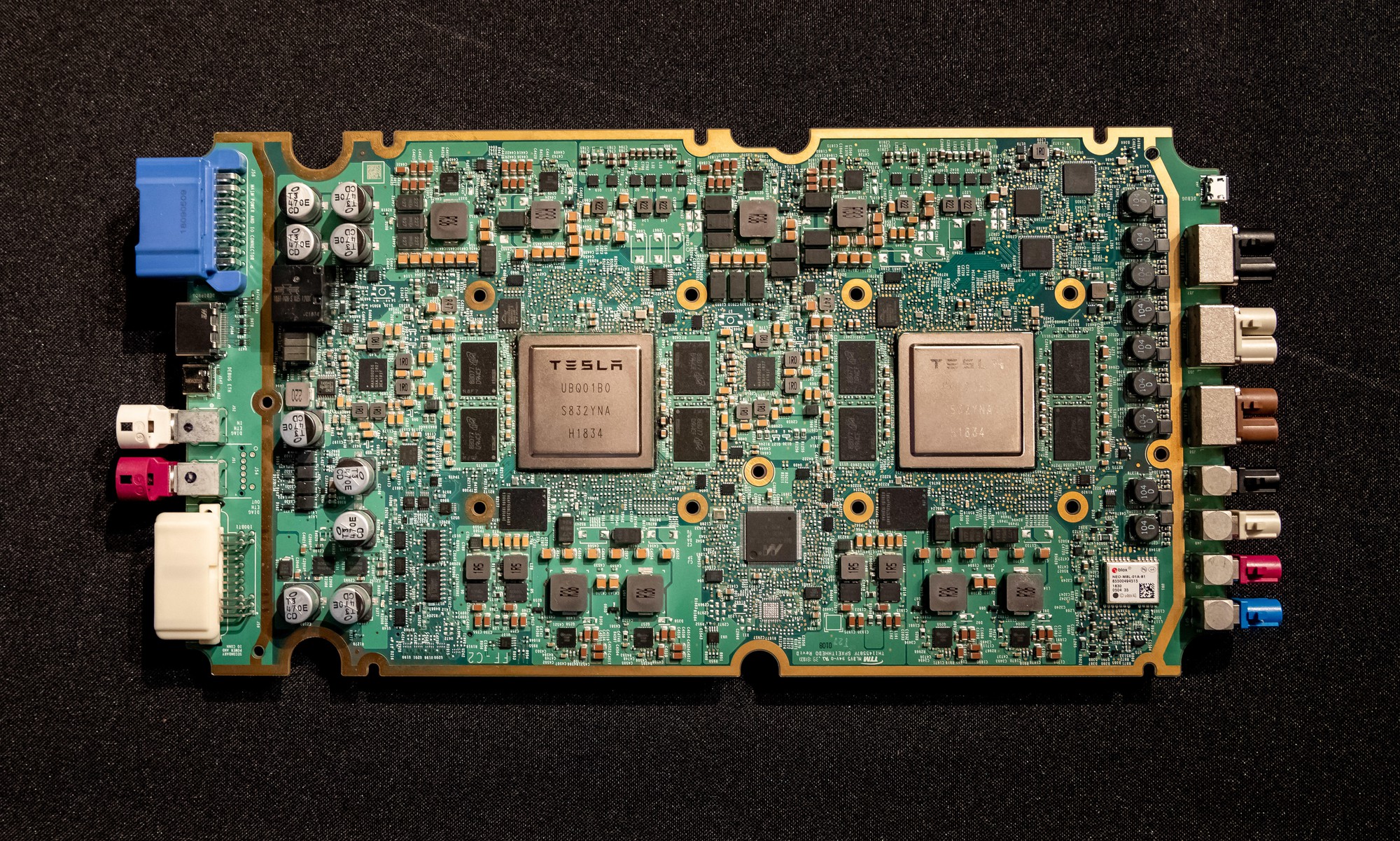

In essence, when you buy a Tesla, it becomes your asset. However, Musk wants to borrow some of the car’s computing power when it is not in use, running outside workloads on the onboard computer that supports Tesla’s Full Self-Driving capability. That computing power would equal, or even surpass, that of NVIDIA’s GeForce RTX 4090 graphics card.
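To get a feel for the scale Musk is describing, the fleet's combined throughput can be estimated as fleet size times the share of cars idle at once times per-car throughput. The sketch below is only a back-of-envelope illustration: the fleet size comes from Musk's quote, while `IDLE_FRACTION` and `PER_CAR_TFLOPS` are hypothetical placeholders, not figures from Tesla.

```python
def fleet_compute_tflops(fleet_size: int, idle_fraction: float,
                         per_car_tflops: float) -> float:
    """Return the combined throughput of all idle cars, in TFLOPS."""
    if not 0.0 <= idle_fraction <= 1.0:
        raise ValueError("idle_fraction must be between 0 and 1")
    return fleet_size * idle_fraction * per_car_tflops


FLEET_SIZE = 100_000_000   # the future fleet size from Musk's quote
IDLE_FRACTION = 0.5        # assumption: half the fleet is parked and reachable
PER_CAR_TFLOPS = 50.0      # assumption: placeholder, not a measured Tesla figure

total_tflops = fleet_compute_tflops(FLEET_SIZE, IDLE_FRACTION, PER_CAR_TFLOPS)
# 1 exaFLOPS = 1,000,000 TFLOPS
print(f"Idle fleet capacity: {total_tflops / 1e6:,.0f} exaFLOPS")
```

A simple sum like this is an upper bound. It assumes every idle car is powered, connected, and working on a task that splits cleanly across millions of machines.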

For Tesla, this scenario presents a mutually beneficial opportunity. The biggest advantage is that the company doesn’t have to spend a dime on building or maintaining the hardware. As Musk made clear during the quarterly earnings call, “the capital costs are borne by the whole world.” In other words, anyone who buys a Tesla is essentially paying for the hardware that the automaker intends to use for this purpose. Moreover, Tesla doesn’t need to maintain a centralized data center, where electricity and cooling costs would eat into its profits.

Tesla’s in-car control board with 2 AI processors

“Everyone would have a little piece of it. And they could, maybe, get a little dividend from it or something,” Musk said.

However, the plan faces significant technical hurdles. While each Tesla’s computing power might match that of a GeForce RTX 4090, it pales in comparison to NVIDIA’s dedicated AI GPUs such as the A100 or H100, which cost from more than $10,000 to as much as $40,000 each. Beyond the performance gap, the lack of other critical features found on those accelerators could make AI computations infeasible.

Moreover, network connectivity varies widely across tens of millions of electric vehicles dispersed worldwide. Data centers and massive AI servers, by contrast, are linked by specialized cabling to maximize bandwidth and minimize latency, specifications that the Wi-Fi or cellular connections in current Teslas cannot match.
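A rough way to see why the link matters is to compare how long it takes to move the same payload over each kind of connection. The sketch below is illustrative only: the 10 GB payload, the 100 Mbit/s home connection, and the 400 Gbit/s data center link are assumed round numbers, not measurements of any Tesla or NVIDIA system.

```python
def transfer_seconds(payload_gigabytes: float, link_gigabits_per_s: float,
                     latency_ms: float = 0.0) -> float:
    """Estimate time to move a payload over a link: serialization plus one-way latency."""
    if link_gigabits_per_s <= 0:
        raise ValueError("link speed must be positive")
    payload_gigabits = payload_gigabytes * 8  # 8 bits per byte
    return payload_gigabits / link_gigabits_per_s + latency_ms / 1000.0


PAYLOAD_GB = 10.0  # assumption: size of the model shard or data batch to ship

# Assumption: a parked car on home Wi-Fi, about 100 Mbit/s with 30 ms latency.
car_link = transfer_seconds(PAYLOAD_GB, link_gigabits_per_s=0.1, latency_ms=30.0)

# Assumption: a data center interconnect at 400 Gbit/s with negligible latency.
dc_link = transfer_seconds(PAYLOAD_GB, link_gigabits_per_s=400.0)

print(f"Car link:         {car_link:,.1f} s")
print(f"Data center link: {dc_link:,.1f} s")
```

Under these assumed numbers the car needs minutes to receive what a data center node receives in a fraction of a second. That is why workloads needing frequent exchange of large intermediate results are a poor fit for a fleet of loosely connected vehicles.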

There are significant technical challenges to Musk’s idea

Nonetheless, to understand the potential of a distributed computing network, one needs to look no further than the destructive power of DDoS attacks—the closest analogy to Musk’s proposal. However, thus far, no one has harnessed this destructive power for more benevolent purposes.

Tesla is also not the first company to consider leveraging vehicles for a distributed computing network to run AI. In 2018, Uber floated a similar idea with its PetaStorm project, and a company called Petals has a similar concept. However, it’s clear that no one has successfully executed this vision yet.

It remains to be seen whether this is a genuinely groundbreaking business idea with the potential to generate new revenue streams for Tesla as it grapples with declining global demand, or just a story Musk is spinning to reassure shareholders about the company’s future. The question takes on added significance as key shareholders deliberate on whether to claw back Musk’s $56 billion stock award.